The Apache access logs are text files that include information about all the requests processed by the Apache server. You can expect to find information like the time of the request, the requested resource, the response code, the time it took to respond, and the IP address used to request the data. You can use Linux command-line tools to parse information out of Apache logs. For example, because Apache logs are plaintext files, you can use `cat` to print the contents of an Apache log to stdout.

I read the official docs and want to build my own Filebeat module to parse my log, but there are few write-ups that would be helpful to me.

Kibana parses logs from the indices that match the `filebeat-*` wildcard. In the log columns configuration we also added the `log.level` and `agent.hostname` columns.
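As a rough illustration of the fields listed above, here is a minimal Python sketch that pulls the IP address, time, requested resource, and response code out of one access log line. It assumes the common "combined" log format; the `parse_line` helper and the sample line are made up for this example. The time taken to respond is not part of that default format and only shows up if the server's `LogFormat` adds it, so the sketch leaves it out.

```python
import re

# Assumed layout (Apache "combined" format):
# host ident user [time] "method resource protocol" status size "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
    r'(?: "(?P<referer>[^"]*)" "(?P<agent>[^"]*)")?'
)


def parse_line(line):
    """Return a dict of fields for one access log line, or None if it does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # Apache writes "-" when no response body was sent.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry


# Hypothetical sample line, not taken from a real server.
sample = (
    '203.0.113.7 - - [16/Dec/2019:10:15:32 +0000] '
    '"GET /index.html HTTP/1.1" 200 5123 "-" "curl/7.68.0"'
)

entry = parse_line(sample)
print(entry["ip"], entry["time"], entry["resource"], entry["status"])
```

Lines that do not fit the assumed format return `None`, so a caller can count or skip them instead of crashing.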

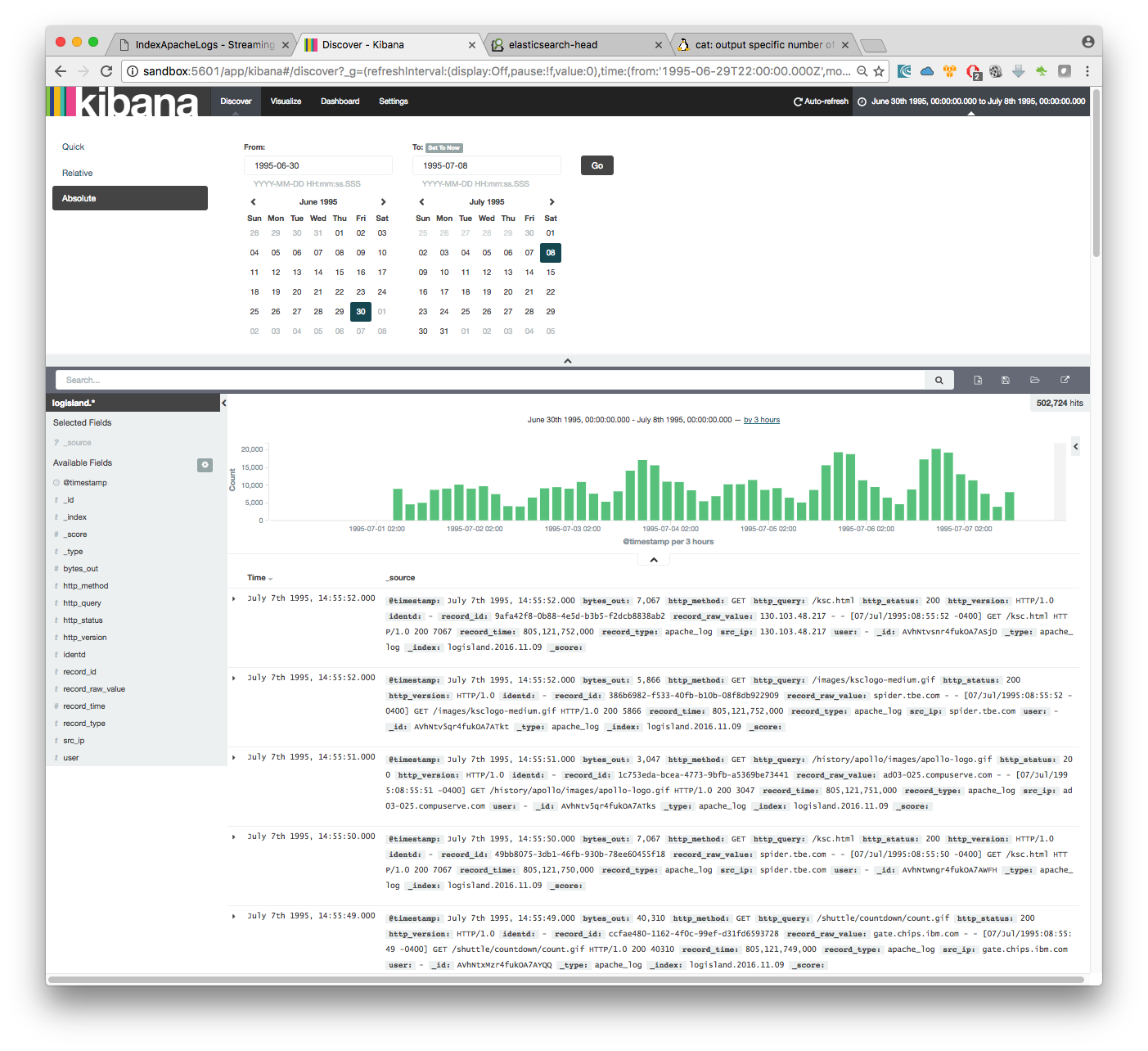

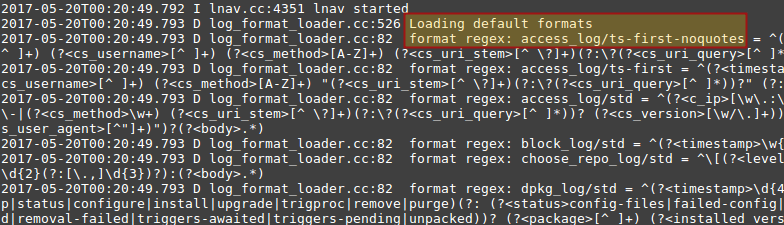

Flare runs on a few different servers and each one of them has its own purpose. We've got web servers that serve the Flare app and other public pages like this blog. Reporting servers will take dozens of error reports per second from our clients and store them for later processing. Finally, there are worker servers which will process these reports and run background tasks like sending notifications and so on.

Each one of these servers runs a Laravel installation that produces interesting metrics and logs. This is quite helpful when something goes wrong. The only problem is that, whenever something goes wrong, we need to manually log in to each server via SSH to check the logs. In this blog post, we'll explain how we combine these logs in a single stream.

There are a couple of services out there to which you can send all the logging output. They provide a UI for everything you send to them. We decided not to use these services because we are already using an Elasticsearch cluster to handle searching errors. It's rather straightforward to use it to search our logging output too.

Elasticsearch provides an excellent web client called Kibana. This isn't only used to manage the Elasticsearch cluster and its contents. It can also show you logs that are sent to Elasticsearch as part of the ELK stack.

When something is logged in our Flare API, we could immediately send that log message to Elasticsearch using the API. However, this synchronous API call would make the Flare API really slow. Every time something gets logged within Flare, we would need to send a separate request to our Elasticsearch cluster, which could happen hundreds of times per second.

Filebeat is a tool by Elasticsearch that runs on your servers and periodically sends log files to Elasticsearch. This happens in a separate process so it doesn't impact the Flare Laravel application. Using Filebeat, logs are sent in bulk, and we don't have to sacrifice any resources in the Flare app, neat!

Integration in Laravel

By default, the Laravel logging format looks like this: `local.ERROR: something went wrong`. Filebeat (and Elasticsearch's ingress) need a more structured logging format. In the Filebeat configuration, `logging.files.rotateeverybytes` is set to `10485760`.

Finally, the last thing left to do is configuring Kibana to read the Filebeat logs. This can be configured from the Kibana UI by going to the settings panel in Observability -> Logs. Check that the log indices contain the `filebeat-*` wildcard.

I have set up an ELK stack and I am trying to parse squid log entries.

Step-4: Shipping Logs to ELK stack using Filebeat.

accesslog2csv: Convert default, unified access log from Apache, Nginx servers to CSV format. Original source by Maja Kraljic, J Modified by Joshua Wright to parse all elements in the HTTP request as different columns, December 16, 2019. The script opens with `import csv`, `import re`, and `import sys`, followed by a check beginning `if len (sys.`

In the left-side navigation pane, click Beats Data Shippers. Optional: If this is the first time you go to the Beats Data Shippers page, view the information displayed in the message that appears and click OK to authorize the system to create a service-linked role for your account.
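To make the difference between the default Laravel line format and a more structured format concrete, here is a minimal Python sketch that turns one plain log line into a JSON object. Several things here are assumptions: the bracketed timestamp prefix (the source only shows `local.ERROR: something went wrong`), the choice of JSON as the structured format, and the field names `timestamp`, `channel`, `level`, and `message`. The source does not show which structured format Flare actually uses.

```python
import json
import re

# Assumed layout of a default Laravel log line: "[timestamp] channel.LEVEL: message"
LARAVEL_LINE = re.compile(
    r'^\[(?P<timestamp>[^\]]+)\] (?P<channel>\w+)\.(?P<level>[A-Z]+): (?P<message>.*)$'
)


def to_structured(line):
    """Return the line as a JSON string, or None if it does not match the assumed layout."""
    match = LARAVEL_LINE.match(line.strip())
    if match is None:
        return None
    # Field names are illustrative only, not a schema required by Filebeat.
    return json.dumps(match.groupdict())


# Hypothetical sample line.
sample = "[2020-01-01 12:00:00] local.ERROR: something went wrong"
print(to_structured(sample))
```

One JSON object per line is easy for a log shipper to read without a custom parsing pattern, which is the main reason to prefer a structured format over free text.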

# Parse Apache logs: Filebeat install

Step 1: Configure and install a Filebeat shipper
